Efficiency

I am a fan of efficient processes. When I see potential for process improvement, I find myself drawn to making it better. I did this at a past company that did not have a properly defined software development life cycle. I developed a process within my own teams that I thought was better. It certainly felt better than “could someone build this deliverable on their machine and email it to the delivery guy?” Nothing was repeatable. Nothing was automatic. Nothing was tested unless someone remembered to test it. And nothing was guaranteed to work. Sound scary? It was. My proof of concept, which I would eventually pitch to the company at large, revolved around automation. Automation at this company meant a few upgrades.

The company was on Subversion at the time, and this new thing called Git was around that everyone else was using and finding better. In one afternoon I copied one of the projects I was leading and converted it to Git while retaining all the history. It was easy to convert. It was certainly faster than I was expecting given how slow Subversion is. And it was simple. I find Occam’s Razor to be a great mediator when arguing with myself. The simplest solution is often the right one. Switching to Git opened up a lot of doors for faster development without the feared SVN Merge Conflict (which happened multiple times a day). Git seemed like a more efficient use of my team’s time. I was sold on Git. My team was sold on Git before I even installed it. How could I get the company to upgrade? I had to show them how awesome it was.

Next up was not having to ask my developers to build a deliverable. If there’s one thing I’ve learned about the inefficiencies of doing anything manually, human error is that thing. Human error exists and can never, ever, ever be mitigated without completely removing the manual step. Jenkins to the rescue! I had set up Jenkins in past jobs, so setting it up for a proof-of-concept was no big deal. About 10 minutes later it was up and running on my local computer alongside the Git server (I know, I know, bad form! This was before containers, people!). Having Git tell Jenkins that a thing was changed was easy. Almost too easy. A few manual builds to work out kinks in the Jenkins build, then a few configurations on my local Git server, and voilà! The Git server was talking to the Jenkins server, and a tag triggered a Jenkins build which stored the build artifacts indefinitely. Now, when we wanted to show something, we just cut a tag! (I would later expand this to an automated deployment, but this was a proof-of-concept that I needed so I could change a company’s process.)
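For the curious, here is a minimal sketch of how a "tag triggers a build" hand-off could look. It is an illustration under assumptions, not the setup described above: it assumes a server-side Git `post-receive` hook (which receives one `<old-sha> <new-sha> <ref-name>` line per updated ref on standard input) and a Jenkins job with remote triggering enabled. The function names, job name, token, and the `TAG` build parameter are all hypothetical.

```python
from urllib.parse import quote, urlencode

TAG_PREFIX = "refs/tags/"


def pushed_tags(hook_input):
    """Return the names of tags created or updated in a post-receive payload.

    Each payload line has the form "<old-sha> <new-sha> <ref-name>".
    A new-sha made up entirely of zeros means the ref was deleted.
    """
    tags = []
    for line in hook_input.splitlines():
        parts = line.split()
        if len(parts) != 3:
            continue  # skip blank or malformed lines
        _old, new, ref = parts
        is_deletion = set(new) == {"0"}
        if ref.startswith(TAG_PREFIX) and not is_deletion:
            tags.append(ref[len(TAG_PREFIX):])
    return tags


def build_trigger_url(jenkins_url, job, token, tag):
    """Build a Jenkins remote-trigger URL for one tag.

    Assumes a parameterized job with a string parameter named TAG
    (hypothetical) and an authentication token configured on the job.
    """
    query = urlencode({"token": token, "TAG": tag})
    base = jenkins_url.rstrip("/")
    return f"{base}/job/{quote(job)}/buildWithParameters?{query}"
```

A real hook would read `sys.stdin`, pass the text to `pushed_tags`, and request each URL from `build_trigger_url` (for example with `urllib.request.urlopen`). Keeping the parsing and URL building separate from the network call makes the logic easy to check without a running Jenkins server.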

My teams used this process for a few weeks, tweaking things as we came across them. We knew (yes, by this time the entire team was on board with the new way since it saved so much time) that we only had one chance at this pitch. After a few weeks I ran it by another team local to my office. They wanted in before I had finished my first sentence, before I could even ask whether their team wanted to be a guinea pig. They were on it within an hour.

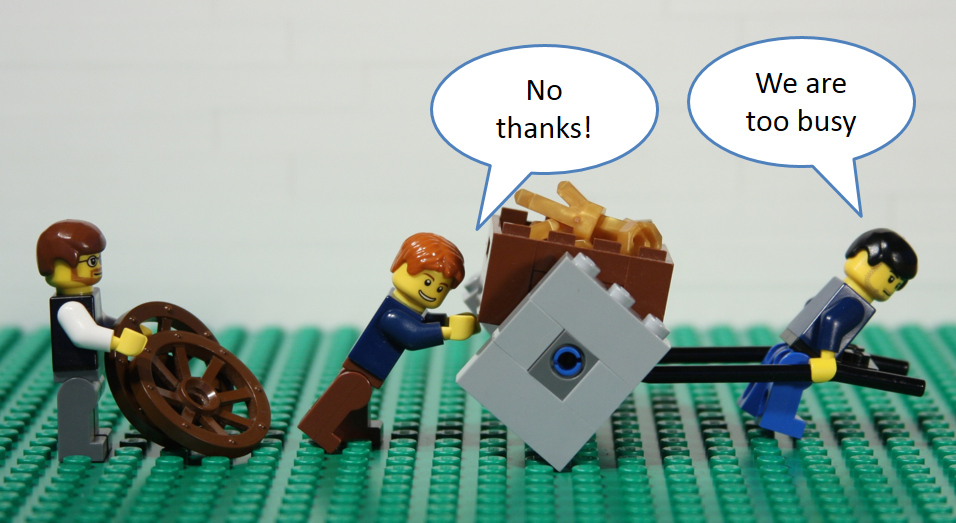

Some times it is better to ask forgiveness than permission. In this case, I asked for a dedicated VM (again, before containers!) located in HQ for my project. I got one after about 2 weeks and started migrating Git and Jenkins to it. Once we were migrated everything was going great! We had buy-in from the entire local office and things seemed to be going well with this out-of-control proof-of-concept-turned-beta project. Interestingly enough, productivity had increased enough that all this non-project-specific work was never noticed. I’m not saying you should go rogue, but I definitely should have pitched this sooner than I did. By this time, I asked for a meeting with the CTO and other Lead Engineers. After we figured out a time we could all be on a Skype call (migrating to Slack is a story for another time) I showed them what we were working on. I showed them Git. I showed them Jenkins. I showed them the entire process from new repository, through the Git Flow branching pattern, to first automated build. Boy am I’m glad this was a Skype call. After the initial “you shouldn’t have done this without permission” speech came the “I’m glad you did though.” They definitely liked what they saw. In my defense (and I believe everyone else in my local office at this point) we had tried to get continuous integration up and running but were continuously shot down. I felt like the poor guy behind the cart in this picture:

So, the IT team in HQ took over the project and I walked them through some of the setup for Git and Jenkins. They, of course, made improvements (LDAP authentication, separate VMs, etc.) when they installed Git and Jenkins.

So, after about 2 months of this entire process, the company had adopted Git and Jenkins as a better solution. Teams were starting to migrate to Git and learn the Git Flow branching pattern. Everything was looking up! It sparked a bit of an overhaul to other processes and happened to fall right in line with their CMMI Level 3 efforts (more on that in another post). Everything seemed right in this process; everything was much simpler.

I’ve learned a lot from this experience. For my own projects I have my Git repositories hosted on GitLab, which uses a build server I host on DigitalOcean. These builds are automatically deployed (within containers!) and freely available. Heck, this site has a GitLab repository with the configurations to repeat deployment. So does my Division Gear Discord bot. If you ever find yourself repeatedly building the same things manually, it might be time to fix that.